The Optimizely Deployment API (Episerver.PaaS.EpiCloud) has helped immensely as I’ve been working on new projects, but one thing has always plagued me: COPYING DOWN CONTENT/ASSETS INTO MY LOCAL ENVIRONMENT.

When I say “Copying down Content,” I mean two pieces: the Database and the Assets.

For the Database Export, I have created my ExportDatabase script, which uses the API to generate a download file and then downloads the database to a local location.

Today, we will be talking about the hard part of this process: downloading Assets.

In this post, we will go through the following:

- What makes up “Content” in an environment, and why would you need to copy it locally?

- What is Azure Blob Storage? How is it used in Optimizely?

- How to Download Assets (script)

- Conclusion

What is “Content” and Why copy it locally?

As I mentioned at the beginning of this article, when I talk about Content, I mean the following two things:

- Database – This is where all page/block property data is stored

  - In DXP, this is an Azure SQL Database

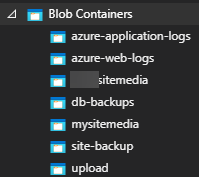

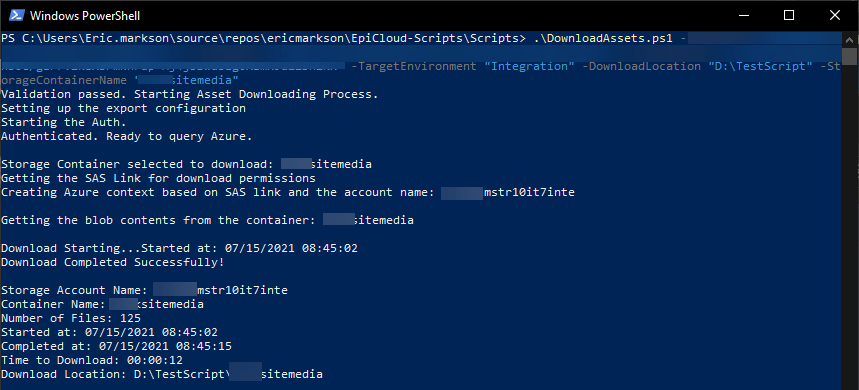

- Assets/Blobs – This is where all of the Images/Files are uploaded. It also stores Application Logs, Web Logs, and Database backups.

  - In DXP, this is Azure Blob Storage

The reason for copying either (or both) of these is simply to bring the data down to a lower environment, specifically a local one. This logic already exists for “promoting” an environment downwards using the script, but that cannot be used to bring anything outside of DXP.

There have been a few scenarios where we have wanted data from one of our environments locally:

- Onboarding a new employee – Content will get them ramped up really quickly

- Bug in an upper environment – Mirrored content will help reproduce the issue; maybe it was how the content admin configured the page/block

- Broken Environment / Content Refresh – Something happened to your local environment? Pull data from a working environment!

As I mentioned above, I already have a script that will download a database, which is really easy since it is a single file. Assets are not so lucky, as there can be hundreds, thousands, or even tens of thousands of files that need to be downloaded.

What is Azure Blob Storage? How is it used?

Microsoft describes Azure Blob Storage like this:

“Blob storage is optimized for storing massive amounts of unstructured data. Unstructured data is data that doesn’t adhere to a particular data model or definition, such as text or binary data.”

https://docs.microsoft.com/en-us/azure/storage/blobs/storage-blobs-introduction

In other words, almost anything can be stored in Azure Blob Storage. Microsoft also lists what Blob storage is designed for:

- Serving images or documents directly to a browser.

- Storing files for distributed access.

- Streaming video and audio.

- Writing to log files.

- Storing data for backup and restore, disaster recovery, and archiving.

- Storing data for analysis by an on-premises or Azure-hosted service.

Optimizely, as a platform (cloud aside), uses Blob Storage for any file that is uploaded into it. Any image, video, PDF, and so on gets directed to the Blob Storage location.

As mentioned above, the platform can also store Application Logs, Web Logs, and Database backups there.

What is an SAS Token?

An SAS Token, or Shared Access Signature Token, is a secure way to grant limited access to resources in your storage account without handing out the account key. This method is great because it allows very granular controls, such as:

- Specific resources that may be accessed

- Which permissions are granted on those resources (read, read/write, etc.)

- How long the SAS is valid

Optimizely uses SAS Tokens to grant access to files, such as when using the API to download a database (blog post here). In our case, the token grants access to a particular Blob Container so that we can download all of the blobs in it.
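To make those controls a bit more concrete, here is a small sketch that pulls apart a SAS URL and shows where each of them lives. The URL is made up for illustration (account name, container, and signature are all placeholders), and `Get-SasTokenInfo` is a hypothetical helper, not part of any Optimizely or Azure module. The query parameters themselves (`sr` for the resource type, `sp` for permissions, `se` for expiry) are standard parts of an Azure SAS.

```powershell
# Hypothetical SAS URL, for illustration only.
$sasUrl = 'https://example.blob.core.windows.net/mysitemedia?sv=2020-08-04&sr=c&sp=rl&se=2030-01-01T05%3A00%3A00Z&sig=REDACTED'

function Get-SasTokenInfo {
    param([Parameter(Mandatory)][string]$SasUrl)

    $uri = [System.Uri]$SasUrl

    # Split the query string into name/value pairs and URL-decode the values.
    $pairs = @{}
    foreach ($pair in $uri.Query.TrimStart('?').Split('&')) {
        $name, $value = $pair -split '=', 2
        $pairs[$name] = [System.Uri]::UnescapeDataString($value)
    }

    [pscustomobject]@{
        Container   = $uri.AbsolutePath.Trim('/')   # which resource may be accessed
        Resource    = $pairs['sr']                  # 'c' = container, 'b' = single blob
        Permissions = $pairs['sp']                  # e.g. 'rl' = read + list
        ExpiresUtc  = [DateTimeOffset]::Parse($pairs['se'], [cultureinfo]::InvariantCulture).UtcDateTime
    }
}

Get-SasTokenInfo -SasUrl $sasUrl
```

Running something like this against a real SAS link is a quick way to check how much time you have left before the token expires.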

How to Download Assets

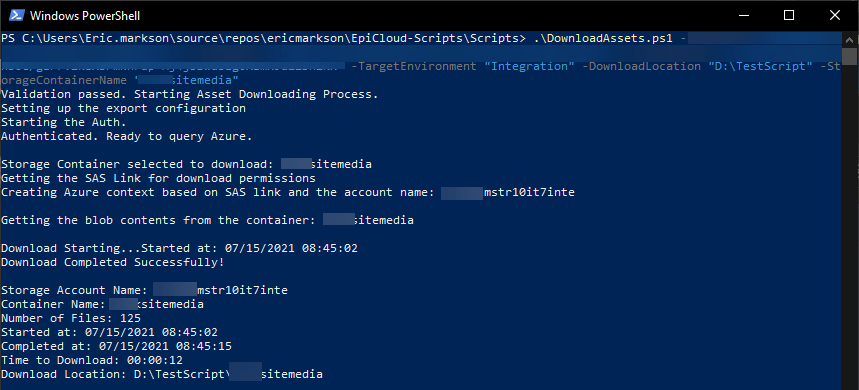

A couple of weeks ago, I officially pushed my newest script out to the Main branch of the OptiCloud Deployment Scripts. This script is called DownloadAssets.ps1.

This script has the following features:

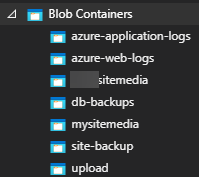

- Interactively Select Blob Containers

  - If you do not know which container you really need (sometimes the developers name things oddly), just do not pass the StorageContainerName into the script, and the script will query Azure to help you find the one that you need. (Example in image below)

  - Warning: This will not work in DevOps or any other environment that does not have an Interactive Shell. In these environments, it will find the container names for you, but you will have to re-run the script with the name passed in.

- Manually select how long you want the SAS Token to be valid for

  - I currently have projects with over 65,000 assets in them, and I know of other clients with double or triple that. The default is 5 hours, but please feel free to override that based on how many files you have. (You can even cancel the run and restart it with a longer expiration time if the Estimated Completion turns out to be longer than the time you provided.)

- Download Assets to a custom Location

  - This is the main point of the script: you can download all of the blobs from the selected container to the custom location that is passed into the script

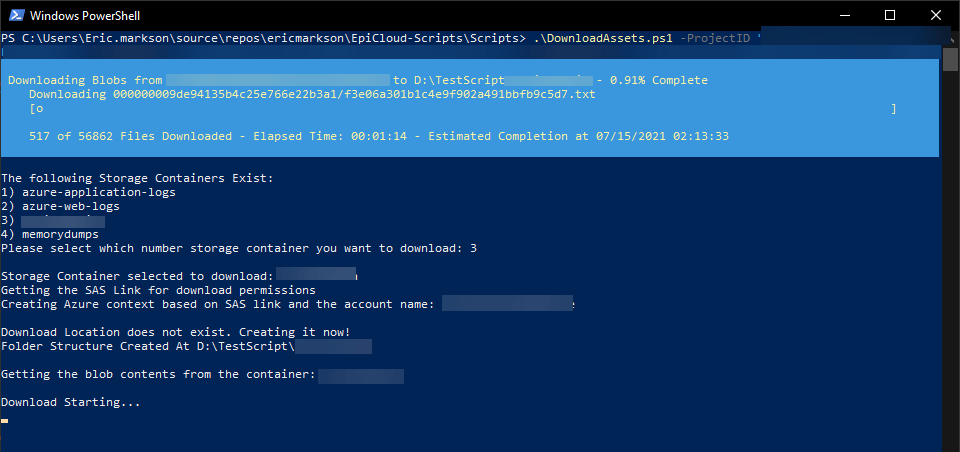

- Statistics during and after download – The script will provide the following:

  - Number of files (how many have been downloaded and how many remain)

  - Elapsed Time

  - Estimated Completion

  - Download Duration (after all files have downloaded successfully)
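For a feel of how an Estimated Completion figure can be worked out, here is a minimal sketch of the arithmetic. This is a simplification under my own assumptions, not the exact code in DownloadAssets.ps1: `Get-DownloadEstimate` and its parameter names are hypothetical, it assumes files download at a steady average rate, and it assumes the SAS Token was issued at the moment the download started.

```powershell
function Get-DownloadEstimate {
    param(
        [Parameter(Mandatory)][int]$Completed,      # files downloaded so far
        [Parameter(Mandatory)][int]$Total,          # files in the container
        [Parameter(Mandatory)][timespan]$Elapsed,   # time since the download started
        [double]$SasHours = 5                       # SAS lifetime (5 hours is the script default)
    )

    if ($Completed -le 0) {
        throw 'At least one file must be downloaded before estimating.'
    }

    # Average time per file so far, projected over the files that are left.
    $remaining      = $Total - $Completed
    $secondsPerFile = $Elapsed.TotalSeconds / $Completed
    $timeLeft       = [timespan]::FromSeconds($secondsPerFile * $remaining)

    [pscustomobject]@{
        Remaining          = $remaining
        Elapsed            = $Elapsed
        EstimatedRemaining = $timeLeft
        # True when the whole run is projected to outlive the SAS Token.
        ExceedsSasLifetime = ($Elapsed + $timeLeft).TotalHours -gt $SasHours
    }
}

# Example: a quarter of 65,000 files finished after 30 minutes.
Get-DownloadEstimate -Completed 16250 -Total 65000 -Elapsed ([timespan]::FromMinutes(30))
```

In that example, three quarters of the files are left, so the estimate lands at roughly an hour and a half remaining, comfortably inside a 5 hour token. If `ExceedsSasLifetime` came back true, that would be the cue to cancel and restart with a longer expiration.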

Now, the important part: HOW DO I START THIS THING?!

    .\DownloadAssets.ps1 -ProjectID "*******" `
        -ClientKey "*******" `
        -ClientSecret "*******" `
        -TargetEnvironment "Integration" `
        -DownloadLocation "D:\TestScript" `
        -StorageContainerName "mysitemedia"
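If you are curious what a run like this leans on, here is a rough sketch of the EpiCloud calls involved in finding a container and getting a SAS link for it. This is my simplified illustration, not the contents of DownloadAssets.ps1. `Get-AssetContainerSasLink` is a hypothetical wrapper; it assumes the EpiCloud module is already installed and imported, and the `storageContainers` and `sasLink` property names reflect my understanding of what the cmdlets return, so double check them against your module version.

```powershell
function Get-AssetContainerSasLink {
    param(
        [Parameter(Mandatory)][string]$ProjectId,
        [Parameter(Mandatory)][string]$ClientKey,
        [Parameter(Mandatory)][string]$ClientSecret,
        [Parameter(Mandatory)][string]$Environment,
        [string]$StorageContainerName,
        [int]$RetentionHours = 5
    )

    # Authenticate against the Deployment API.
    Connect-EpiCloud -ClientKey $ClientKey -ClientSecret $ClientSecret -ProjectId $ProjectId | Out-Null

    if (-not $StorageContainerName) {
        # No container given: return the available names so the caller can pick one.
        $result = Get-EpiStorageContainer -ProjectId $ProjectId -Environment $Environment
        return $result.storageContainers
    }

    # Ask for a SAS link to the chosen container, valid for $RetentionHours.
    $link = Get-EpiStorageContainerSasLink -ProjectId $ProjectId `
        -Environment $Environment `
        -StorageContainer $StorageContainerName `
        -RetentionHours $RetentionHours
    return $link.sasLink
}
```

Calling it without `-StorageContainerName` mirrors the "find the container for me" behavior described above; calling it with a name returns the link that the actual blob download would then use.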

Conclusion

This has got to be one of my favorite scripts and, honestly, one of the most complex in my library. It is absolutely one of the most useful, as well.

There are so many uses for this script, but I am primarily going to use it for copying files locally (yay content sync downs) and for getting new developers ramped up on existing projects.

As always, please let me know if you have any comments/questions/concerns. I am more than happy to help!